On Redis, Memcached, Speed, Benchmarks and The Toilet

Tuesday, 21 September 10

The internet is full of things: trolls, awesome programming threads, p0rn, proofs of how cool and creative human beings can be, and of course crappy benchmarks. In this blog post I want to focus my attention on the latter, and at the same time I'll try to show what a good benchmarking methodology is supposed to look like, at least from my point of view.

Why speed matters?

In the Web Scale era everybody is much more obsessed with scalability than speed. That is, if I have something that is able to handle a (not better defined) load of L, I want it to handle a load of L*N if I use N instances of this thing. Automagically, Fault tolerantly, Bullet proofly.

Stressing scalability so much has somewhat overshadowed what in the '80s was one of the most interesting measures of software coolness, that is: raw speed. I mean old-fashioned speed, that is, how many operations is this single thing able to perform? How many triangles can you trace in a second? How many queries can you answer? How many square roots can you compute?

Back in the early days of home computing this was so important because CPU power was a limited resource. Now computers are way, way faster, but is speed still important, or should we just care about scalability, given that we can handle any load after all by just adding more nodes? My bet is that raw speed is very important, and these are a few arguments:

- Raw speed is bound to queries per watt. Energy is a serious problem, not just for the environment, but also cost-wise. The more computers you run to serve your 1000 requests per second, the bigger your monthly bill.

- Large systems are hard to handle, and hardware is not free. If I can handle the same workload with 10 servers instead of 100 servers, everything will be cheaper and simpler to handle.

- 95% of all the web innovation today is driven by startups. Startups start very, very small, and they don't have the budget for large powerful computers. A software system that is able to handle a reasonable amount of load with a cheap Linux box is going to win a lot of acceptance in the most fertile of the environments for new technology toys, that is, startups.

- Scalable systems many times work better with a smaller number of nodes, so even if there is the ability to scale it's better to run a 10-node cluster than a 100-node cluster. So the single node of a scalable system should be as fast as possible.

Systems for the toilet

Apparently I'm not alone in reconsidering the importance of raw speed. The sys/toilet blog published a benchmark comparing the raw speed of Redis and Memcached. The author of this blog firmly believes that many open source programs (and not only those) should go to the toilet instead of running in real systems, since they are not good. I couldn't agree more with this sentiment. Unfortunately I also think that our dude is part of the problem, since running crappy benchmarks is not going to help our pop culture.

You should check it yourself by reading the original post, but here is why the sys/toilet benchmark is ill conceived:

- All the tests are run using a single client in a busy loop. I love '80s busy loops but... well, let's be serious. I left a comment in the blog about this problem, and the author replied that in the real world things are different and threading is hard. Sorry, but I was not thinking about multi-threaded programming: a DB under load receives many concurrent queries from different instances of the web server(s) (these can be threads, but much more easily different Apache processes). So your web dude does not need to start writing concurrent software to have N queries at the same time against your DB. If your DB is under serious load, you can bet that you are serving many requests at the same time. Sorry if this is obvious for most of the readers.

- Not just the above: when you run single-client benchmarks, what you are actually measuring is also the round trip time between the client and the server, all the other kinds of latency involved, and of course the speed of the client library implementation.

- The test was performed with very different client libraries. For instance libmemcached accepted as input a buffer pointer and a length, while credis, the library used in the mentioned benchmark, was calling strlen() for every key. So in one benchmark there was a table of pre-computed lengths passed to the library, and in the other the lib was calling strlen() itself. This is just an example: there are much bigger parsing, buffering, and other implementation details affecting the outcome, especially if you are using a single-client approach, which is perfect for measuring these things instead of the actual server performance.
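To put a number on the round trip point above, here is a minimal back-of-the-envelope sketch. It is a simplified model, not code from any of the benchmark tools: the function name, the 100 microsecond round trip and the 100,000 requests per second server limit used in the usage note are all made up for illustration, and client-side processing time is ignored.

```c
#include <assert.h>

/* Hypothetical model (not from redis-benchmark or mc-benchmark): upper bound
 * on requests per second when `clients` blocking clients each wait one full
 * round trip of `rtt_us` microseconds per request, against a server that can
 * process at most `server_max` requests per second. */
double max_requests_per_sec(unsigned clients, double rtt_us, double server_max) {
    assert(rtt_us > 0.0);
    /* Each blocking client completes at most one request per round trip. */
    double client_bound = (double)clients * (1e6 / rtt_us);
    /* The server limit only becomes visible once the clients can saturate it. */
    return client_bound < server_max ? client_bound : server_max;
}
```

Under these assumed figures, `max_requests_per_sec(1, 100.0, 100000.0)` is capped at 10,000 by the round trip alone, no matter how fast the server is, while `max_requests_per_sec(50, 100.0, 100000.0)` reaches the server limit. With a single client the number you read is mostly the latency, not the server.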

I checked the implementation more carefully and found a problem in the query parsing code that resulted in a quadratic algorithm due to a wrongly positioned strchr() call. The problem is now fixed in this branch, which is what I'm using to run the benchmarks, and which will be merged into Redis master within days. After this fix large requests are parsed five times faster than before.
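The offending code is not shown in this post, so what follows is not the Redis parser. It is only a generic sketch of how a terminator search in the wrong place can turn linear work into quadratic work, under the assumption that a request arrives in several reads and the search restarts from the beginning of the buffer after each one. The function names and the chunked-arrival model are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical: bytes inspected while looking for the terminator of a
 * `total`-byte request that arrives `chunk` bytes at a time, when the search
 * starts again at offset 0 after every read. The terminator is assumed to be
 * at the very end of the request. */
size_t bytes_scanned_restarting(size_t total, size_t chunk) {
    assert(chunk > 0);
    size_t scanned = 0;
    for (size_t have = chunk; ; have += chunk) {
        if (have > total) have = total;
        scanned += have;            /* the whole buffer is searched again */
        if (have == total) break;
    }
    return scanned;
}

/* Same arrival pattern, but the search resumes where the last one stopped. */
size_t bytes_scanned_resuming(size_t total, size_t chunk) {
    assert(chunk > 0);
    size_t scanned = 0, pos = 0;
    for (size_t have = chunk; ; have += chunk) {
        if (have > total) have = total;
        scanned += have - pos;      /* only the newly arrived bytes */
        pos = have;
        if (have == total) break;
    }
    return scanned;
}
```

For a 1000-byte request arriving in 100-byte chunks, the restarting version inspects 5500 bytes and the resuming one 1000. The gap grows with the square of the request size, which is why only large requests suffer.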

But at this point I started to be curious...

Redis VS Memcached

Even if Redis provides many more features than memcached, including persistence, complex data types, replication, and so forth, it's easy to say that it is an almost strict superset of memcached. There are GET and SET operations, timeouts, a networking layer, check-and-set operations and so forth. These two systems are very, very similar in their essence, so comparing the two is definitely an apple to apple comparison.

Not only this, but memcached is truly mature software, which underwent a lot of real world stress testing and changes to work well in practice. It's definitely a good yardstick to have for Redis. So given the great similarity between the systems, if there are huge discrepancies there is likely a bug somewhere, either in Redis or memcached. And this actually was the case with MGET! I fixed a bug thanks to the comparison of numbers with memcached.

Apple to Apple, with the same mouth

But even comparing Apples to Apples is not trivial. You need to really have the very same semantics client side, do sensible things about the testing environment, number of simulated clients and so forth.

In Redis land we already have redis-benchmark, which has assisted us over the last year and a half, and worked as a great advertising tool: we were able to show that our system was performing well.

Memcached and Redis protocols are not so different: both are simple ASCII protocols with similar formats. So I used a few hours of my time to port redis-benchmark to the memcached protocol. The result is mc-benchmark.

If you compare redis-benchmark and mc-benchmark with diff, you'll notice that apart from a lot of code removed since memcached does not support many of the operations that redis-benchmark was running against Redis, the only real difference in the source code is the following:

    @@ -213,8 +206,15 @@
         char *tail = strstr(p,"\r\n");
         if (tail == NULL)
             return 0;
    -    *tail = '\0';
    -    *len = atoi(p+1);
    +
    +    if (p[0] == 'E') { /* END -- key not present */
    +        *len = -1;
    +    } else {
    +        char *x = tail;
    +        while (*x != ' ') x--;
    +        *tail = '\0';
    +        *len = atoi(x)+5; /* We add 5 bytes for the final "END\r\n" */
    +    }
         return tail+2-p;
     }

Basically in the memcached protocol the length of the payload is one of the space-separated arguments, while in the Redis protocol it is at a fixed offset (there is just a "$" character before it). So I had to add a short while loop (that will scan just two bytes in our actual benchmark), and an explicit, very fast test for the "END" condition of the memcached protocol.
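To make the difference concrete, here is a minimal standalone sketch of the two header formats. It mirrors the logic of the diff above but is not the benchmark code itself: the function names and return conventions are hypothetical, the input is treated as read-only, and the extra 5 bytes for the trailing "END\r\n" are left out.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: payload length from a Redis bulk reply header such as
 * "$32\r\n". Returns -1 for "$-1\r\n" (key not present) and -2 if the header
 * line is not complete yet. */
int redis_bulk_len(const char *p) {
    assert(p != NULL);
    if (strstr(p, "\r\n") == NULL) return -2;
    return atoi(p + 1);              /* the length sits right after the '$' */
}

/* Hypothetical helper: payload length from a memcached GET reply header such
 * as "VALUE foo 0 32\r\n". Returns -1 for "END\r\n" (key not present) and -2
 * if the header line is not complete yet. */
int memcached_value_len(const char *p) {
    assert(p != NULL);
    const char *tail = strstr(p, "\r\n");
    if (tail == NULL) return -2;
    if (p[0] == 'E') return -1;      /* "END": key not present */
    const char *x = tail;
    while (x > p && *x != ' ') x--;  /* walk back to the last space */
    return atoi(x);                  /* atoi() skips the leading space */
}
```

With a 32 byte payload, `redis_bulk_len("$32\r\n")` and `memcached_value_len("VALUE foo 0 32\r\n")` both return 32, and the backward scan in the memcached case only steps over the two digits before it hits the space.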

Note that mc-benchmark and redis-benchmark are designed to skip the reply, so the actual processing is just the absolute minimum required to read the reply. Also note that the few lines of code added to turn redis-benchmark into mc-benchmark are inherently needed by the memcached protocol design. This means that we have an apples to apples comparison, and with the same mouth!

Methodology

The following is the methodology used for this test:

- I used Redis master, the "betterparsing" branch to run the test. The result of the benchmark should be pretty similar to what you'll get with Redis 2.0, excluding the MGET test, which I'm not including for now since it's not part of redis-benchmark currently.

- I used the latest memcached stable release, that is, memcached-1.4.5.

- Both systems were compiled with the same GCC, same optimization level (-O2).

- I disabled persistence in Redis to avoid overhead due to the forking child in the benchmark.

- I ran memcached with -m 5000 in order to avoid any kind of expiration of old keys. This way both Redis and memcached were holding the whole dataset in memory.

- The test was performed against real hardware, an oldish Linux box completely idle.

- Every test was run three times, and the highest figure obtained was used.

- The size of the payload was 32 bytes. Sizes of 64, 128, 1024 bytes were tested without big differences in the results. Once you start to go beyond the bounds of the MTU, performance will degrade a lot in both systems.

    #!/bin/bash

    # Select the tool to run: mc-benchmark for memcached, redis-benchmark for Redis.
    # BIN=./redis-benchmark
    BIN=./mc-benchmark
    payload=32
    iterations=100000
    keyspace=100000

    for clients in 1 5 10 20 30 40 50 60 70 80 90 100 200 300
    do
        SPEED=0
        # Run every configuration three times and keep the best figure.
        for dummy in 0 1 2
        do
            S=$($BIN -n $iterations -r $keyspace -d $payload -c $clients | grep 'per second' | tail -1 | cut -f 1 -d'.')
            if [ $(($S > $SPEED)) != "0" ]
            then
                SPEED=$S
            fi
        done
        echo "$clients $SPEED"
    done

Sorry, the comparison with != "0" is surely lame but I did not remember the right way to do it with shell scripting :)

Results

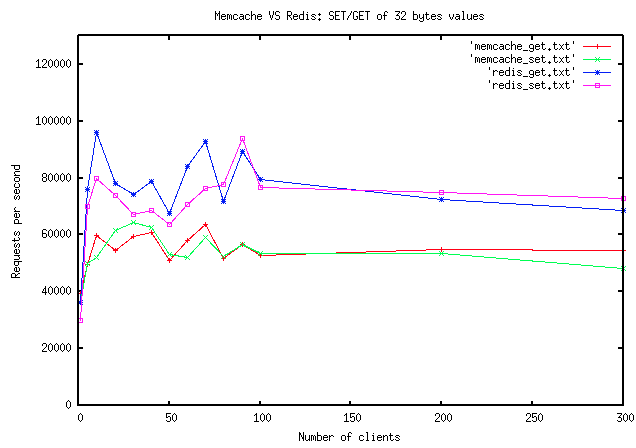

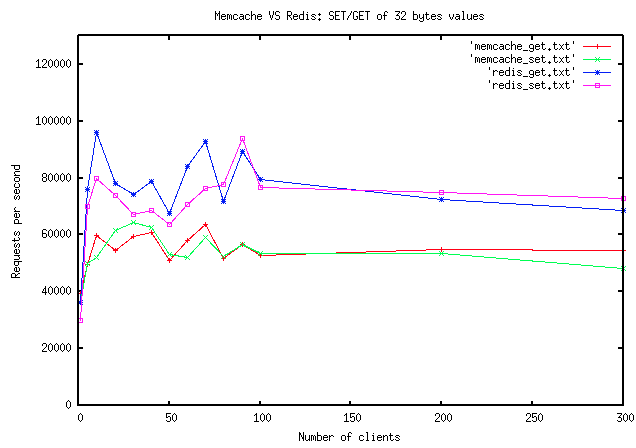

Finally... here are the results, in form of a graph generated with gnuplot:

As you can see there are no huge differences, nothing in the range of an order of magnitude, but Redis is surely considerably faster in this test. If you check the left side of the graph you'll notice that the speed is about the same with a single client. The more simultaneous clients we add, the more Redis pulls ahead.

It's worth noting that I measured the CPU used by both systems in a 1 million keys GET/SET test, obtaining the following results:

- memcached: user 8.640 seconds, system 19.370 seconds

- redis: user 8.95 seconds, system 20.59 seconds

Atomic operations can be traded for speed

To really understand the potential of the two systems as caching systems, there is another aspect to take into account. Redis can run complex atomic operations against hashes, lists, sets and sorted sets at the same speed it can run GET and SET operations (you can check this fact using redis-benchmark). The atomic operations and more advanced data structures allow you to do much more in a single operation, avoiding check-and-set operations most of the time. So I think that once we drop the limit of just using GET and SET and uncover the full power of the Redis semantics, there is a lot you can do with Redis to serve a lot of concurrent users with very little hardware.

Conclusions

This is a limited test that can't be interpreted as "Redis is faster". It is surely faster under the tested conditions, but different real world workloads can lead to different results. For sure, after these tests I have the impression that the two systems are well designed, and that in Redis we are not overlooking any obvious optimization.

As a result of this new branch of investigation, in the next days I'll create speed.redis.io, which will be something like a continuous speed test of the different branches of Redis, with history. This way we can be sure that our changes will not alter performance, or we can try to improve performance while checking how the numbers change after every commit.

Disclaimer

I used a limited amount of time to set up and run these tests. If you find any kind of flaw or error, please drop me a note or a comment on this blog post, so that I can fix it and publish updated results.

Comments

21 Sep 10, 10:59:00

What do you call an "oldish Linux box"? I'm asking because I'd love to optimize our various redis instances, and we're not getting the same kind of performance that you get. It would greatly help to have some kind of "checklist" on how to have a faster redis.

21 Sep 10, 11:05:02

Julien: this is what I get from cat /proc/cpuinfo:

    processor : 0
    vendor_id : GenuineIntel
    cpu family : 6
    model : 23
    model name : Intel(R) Core(TM)2 Quad CPU Q8300 @ 2.50GHz
    stepping : 10
    cpu MHz : 1998.000
    cache size : 2048 KB

So it's not so bad actually, but we bought it for 300 euros, so I guess not at the level of a decent Linux server.

About optimizations, it seems like Redis is mostly CPU bound, so the faster the CPU the better (trying to compile with -O3 can help as well). The other obvious part that is worth investigating is parallelism and the networking stack: network links between DB and web servers and so forth.

21 Sep 10, 11:23:04

@Pedro: unlikely to make any difference, with redis-benchmark the numbers are exactly the same. The only difference is if you send an MGET with many, many keys in the same command.

21 Sep 10, 13:11:38

Semantics -- you saw it coming ;-)

> if [ $(($S > $SPEED)) != "0" ]

If "S" is always a whole number (looks like that from the cut part) I think I would have gone for either:

if [ $S -ge $SPEED ]

OR

if (($S > $SPEED))

For more, see:

http://tldp.org/LDP/abs/html/comparison-ops.html#I...

WolfHumble

21 Sep 10, 14:06:41

http://en.oreilly.com/velocity2010/public/schedule... outlines a methodology to actually quantify scalability as shown with memcached and outlines problems in that software. Have a look and see if that would be useful as an approach...

21 Sep 10, 15:04:12

I agree with you that lightning fast speed is important before horizontal scaling. My guess would be people shard before they have truly optimized all of their code.

21 Sep 10, 15:17:07

@Julien my guess is you don't have persistence turned off like @antirez does since that's not the default

21 Sep 10, 21:53:33

I like the idea of speed.redis.io; it'll be interesting to see the performance of redis change as features are added.

23 Sep 10, 12:35:24

Just wanted to say thank you for caring so much about speed, and for creating redis.

To me redis fills a different role than memcached and so comparing the two isn't something that I'm all that interested in.

I'm not using redis yet in any live projects, but I have used it in two projects I was working on developing and am likely to use it in future projects :)

29 Sep 10, 02:22:54

Why do you consider memcached and Redis comparison an apple to apple comparison -- memcached has no guarantee that if a set was successful, the next get would return successfully too (even if expiration time is not passed and there is no process restart or network outage). Redis on the other hand must support this (if no expiration + no restart + no network outage) (correct me if I am wrong). Because of this difference memcached can use UDP while Redis can't. For that matter, I wonder if memcached with UDP would be faster than Redis.

31 Jan 11, 10:03:28

Antirez,

Does release candidate 2.2 contain the fix for the MGET performance you wrote about? I was not able to find anything about improving MGET performance in the release notes for either release 2.0 or 2.2.

15 Mar 11, 06:07:17

Mikhail, I've checked it by rebasing the betterparsing branch onto the 2.2.2 tag, and it showed that the changes contained in betterparsing are already in the 2.2.2 tree. Antirez, please correct me if I'm wrong.

16 Mar 11, 02:50:33

I'm investigating how to turn persistence off in Redis; the documentation is not specific about this but I came across this article and wondered if you could tell me how you turned off the persistence? Thanks.

16 Mar 11, 03:37:13

My last comment was a little premature and I have discovered the comment in the config file that explains how to disable persistence.

06 Apr 11, 20:51:32

First things first: **awesome** article! Kudos!

Next, What happened to speed.redis.io? :) It would be a really cool thing to have.

Cheers!
